A quick disclaimer: the pop culture and literary references below are covered in more detail, with sources cited, in the author’s original work, if you’re interested in digging deeper.

Admittedly, I haven’t written a book or film review in about 15 years, but here goes.

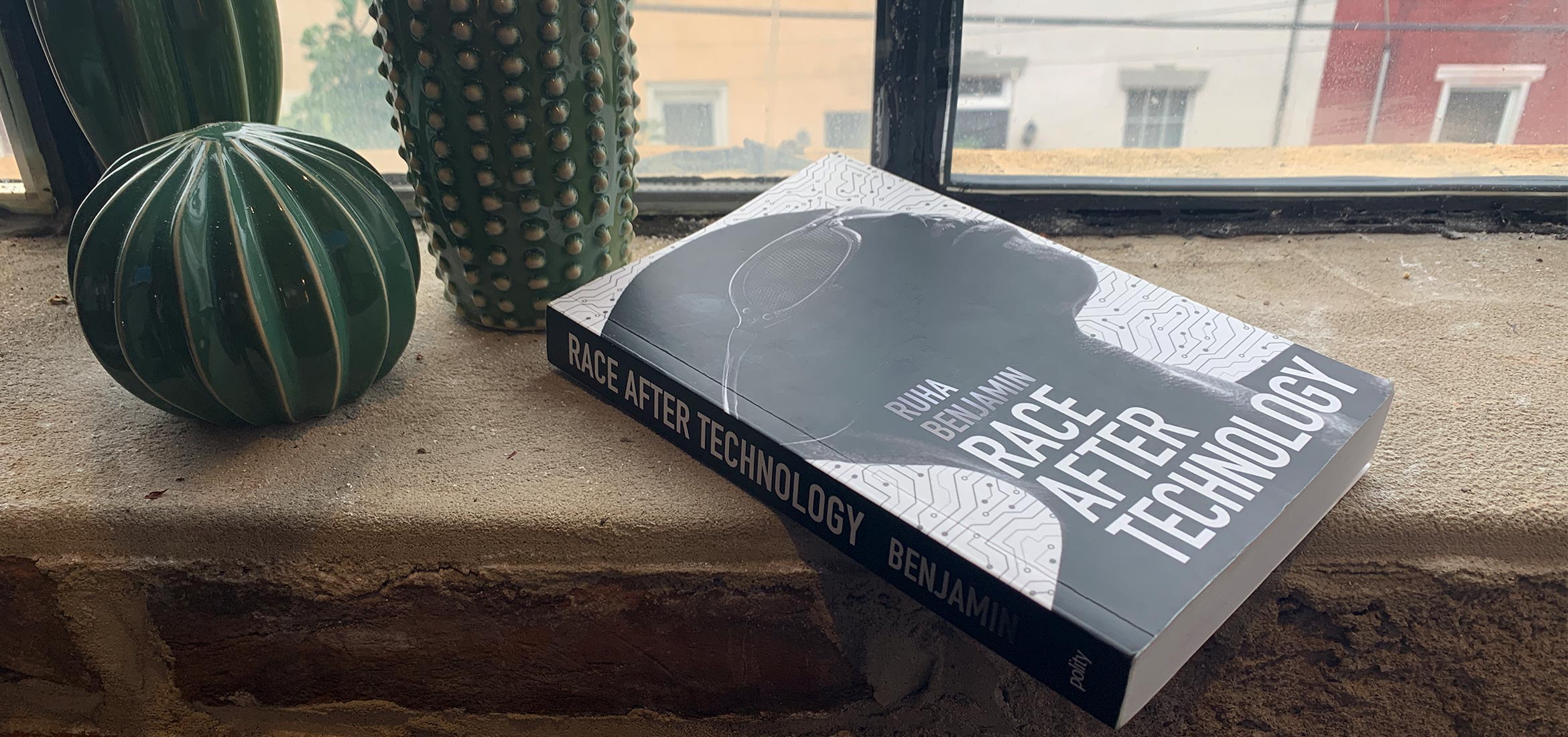

Last month, while driving through New Jersey, I stumbled upon a radio interview with Princeton professor Ruha Benjamin. She was discussing her new book, and as someone who makes websites all day, I was struck by its subtitle: “Abolitionist Tools for the New Jim Code.”

At the risk of explaining the obvious (and oversimplifying), the Jim Crow laws defined racial segregation in the US from 1865 until 1968: roughly a century of enforced inequality after slavery, in the South as well as the rest of the country (though not every state had the same laws).

Fast-forward to 2010, when Michelle Alexander (a lawyer and educator) coined the term “the New Jim Crow” in her seminal book, “The New Jim Crow: Mass Incarceration in the Age of Colorblindness,” which equates the systemic racism of today’s prison machine (and every aspect of US culture tangentially related to it) with the original Jim Crow era. That book also sets the stage for Benjamin to dig deeper into the tech sphere in “Race After Technology,” building on Alexander’s lexicon and research.

Despite clocking in at 285 pages, the book is approachable and a relatively quick read, thanks to healthy page margins, a 48-page introduction, and nearly 85 pages of back matter (acknowledgments, references, footnotes). It does grow denser as it progresses, but that is probably true of most scholarly writing.

Moving on to the most important part: Benjamin structures her narrative into five chapters.

1. ENGINEERED INEQUITY: ARE ROBOTS RACIST?

Using a handful of introductory examples, Benjamin lays out troubling recent cases in which racial inequality (or outright racism) has surfaced in artificial intelligence (AI) and algorithms. Memorable examples include an AI-powered online beauty pageant (http://beauty.ai/) that did not select any darker-skinned contestants as finalists or winners, a Google algorithm that labeled images of people with darker skin tones as “gorillas,” and China’s social credit system, which is akin to a “Black Mirror” episode. The underlying question runs along these lines: “Is it possible to avoid inequality when people’s biases and the prevailing culture always inform the origins of supposedly ‘colorblind’ algorithms and scripts that drive every aspect of online experiences and beyond?”

2. DEFAULT DISCRIMINATION: IS THE GLITCH SYSTEMIC?

Benjamin drives home the point that the digital default is to discriminate, drawing in part on the déjà vu moments in “The Matrix.” She argues by analogy that a detectable error (or glitch) signals an unjust architect at work behind the scenes; it is a glimpse of a larger system, hidden behind code, that oppresses people of color (much as the cat that Keanu Reeves’ character sees pass by twice is visual evidence of a glitch in the simulation). She departs a bit from the overarching digital focus to mention Paula Deen’s public racism, along with anecdotes about how Robert Moses’ sweeping urban planning segregated lower-income urban families (often Black families) from affluent areas. Perhaps these examples serve to show how offline, cultural racism empowers and informs its online counterparts. Returning to the web, she notes that in 2016 someone noticed that a Google image search for “three Black teenagers” returned almost nothing but mugshots. “Three White teenagers” produced happy stock photos, and “three Asian teenagers” displayed barely clothed girls and women. There’s a big problem here.

3. CODED EXPOSURE: IS VISIBILITY A TRAP?

This chapter takes an interesting turn. First, Benjamin establishes that digital experiences carry a default “Whiteness,” which in turn renders people of color invisible (at one point, certain HP webcams were unable to track the movement of darker-skinned individuals, and facial recognition has been shown to perform best on users who look like the software’s programmers). Then she makes the point that becoming visible can in fact be more harmful and dangerous than remaining invisible. This is at its worst in racial surveillance and in the numerous attempts to use AI to predict potential criminality from someone’s appearance. She also offers several examples of this coded exposure outside the US.

4. TECHNOLOGICAL BENEVOLENCE: DO FIXES FIX US?

Whenever I hear the word “benevolence,” I have to look it up to confirm I’m thinking of the right word. What trips me up is that it’s meant to be a positive concept (kindness, good intentions), yet it often seems to be used tongue-in-cheek to signal only the outward appearance of good intentions. That is how Benjamin uses it here: the “fixes” of electronically monitoring “offenders” (including ankle bracelets for recently detained families near the US border with Mexico, and similar devices for monitoring parolees) are not a real fix for a deeply flawed, racist system. For me, one of the most eye-opening moments in the book was learning about Diversity Inc. and how the company can assign anyone a race based on a set of digital criteria (one key data point being a person’s zip code), then sell that data to other companies. This determination is not always accurate, but it lets companies target advertising and attention at individuals based on assumptions about what their race must be.

5. RETOOLING SOLIDARITY, REIMAGINING JUSTICE

As the book draws to a close, Benjamin begins to offer some solutions, though she also points out the risk of publicly announcing every way to fight the inequity discussed earlier. In other words, there can be value in keeping certain strategies hidden: she cites Frederick Douglass’ criticism of people who proudly revealed the routes of the Underground Railroad used to escape from slavery, since doing so made it harder for others to escape. One suggestion she does make is to perform “equity audits” on algorithms. Another example of disrupting the status quo is Hyphen-Labs’ work on earrings that record interactions with the police and visors that thwart facial recognition software.

In the concluding pages, Benjamin closes on a note of hope that it is possible to recognize and resist the New Jim Code.

I’d recommend this book to anyone interested in learning more about the hidden systems that define, categorize, and shape people’s futures without their permission or any ethical oversight. At times the structure and language do feel like a college textbook, but that doesn’t diminish the power of the research Ruha Benjamin brings to the table. We all play a role in shaping the internet, so let’s do everything we can to steer it toward fairness and justice.

*1, 2, 3: Illustration by Christian Debuque for Pixel Parlor.